Two pioneers of artificial intelligence, John J. Hopfield and Geoffrey E. Hinton, won the Nobel Prize in Physics for helping create the building blocks of machine learning, a technology that is revolutionizing the way we work and live but is also creating new threats for humanity.

The two Nobel Laureates used tools from physics to develop methods that are the foundation of today’s powerful machine learning. John Hopfield created an associative memory that can store and reconstruct images and other types of patterns in data.

Geoffrey Hinton invented a method that can autonomously find properties in data, and so perform tasks such as identifying specific elements in pictures. When we talk about artificial intelligence, we often mean machine learning using artificial neural networks.

The technology was originally inspired by the structure of the brain. In an artificial neural network, the brain’s neurons are represented by nodes that have different values. These nodes influence each other through connections that can be likened to synapses and that can be made stronger or weaker.

The network is trained, for example by developing stronger connections between nodes with simultaneously high values. The laureates have conducted important work with artificial neural networks from the 1980s onward. John Hopfield invented a network that uses a method for saving and recreating patterns. We can imagine the nodes as pixels.

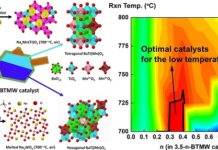

The Hopfield network utilises physics that describes a material’s characteristics due to its atomic spin – a property that makes each atom a tiny magnet. The network as a whole is described in a manner equivalent to the energy in the spin system found in physics, and is trained by finding values for the connections between the nodes so that the saved images have low energy.

When the Hopfield network is fed a distorted or incomplete image, it methodically works through the nodes and updates their values so the network’s energy falls. The network thus works stepwise to find the saved image that is most like the imperfect one it was given.
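
For readers who want to see the idea in action, the store-and-recall procedure described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions, not the laureates' own code: nodes take the values +1 or -1, connections are set by strengthening links between nodes that agree in a saved pattern, and the function names `train`, `energy` and `recall` are made up for this example.

```python
def train(patterns):
    """Set connection strengths so that the saved patterns have low energy.

    Assumption: a simple Hebbian-style rule, where a connection grows
    stronger when the two nodes it joins have the same value in a pattern.
    """
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for pattern in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # a node has no connection to itself
                    weights[i][j] += pattern[i] * pattern[j] / n
    return weights


def energy(weights, state):
    """Energy of the whole network for one assignment of node values."""
    n = len(state)
    return -0.5 * sum(
        weights[i][j] * state[i] * state[j]
        for i in range(n)
        for j in range(n)
    )


def recall(weights, state, max_sweeps=10):
    """Work through the nodes one by one, updating each so energy never rises."""
    state = list(state)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            # Combined influence of all other nodes on node i.
            field = sum(weights[i][j] * state[j] for j in range(n))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:  # no node wants to change: a stored state was reached
            break
    return state


# Two tiny 8-"pixel" patterns, chosen for illustration only.
stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
weights = train(stored)

noisy = [-1, 1, 1, 1, -1, -1, -1, -1]  # first pattern with one pixel flipped
restored = recall(weights, noisy)

print("restored:", restored)
print("energy before:", energy(weights, noisy))
print("energy after:", energy(weights, restored))
```

In this toy case the distorted input settles back into the first saved pattern, and the energy of the restored state is lower than that of the distorted one.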

Geoffrey Hinton used the Hopfield network as the foundation for a new network that uses a different method: the Boltzmann machine. This can learn to recognise characteristic elements in a given type of data. Hinton used tools from statistical physics, the science of systems built from many similar components.

According to the press release, the machine is trained by feeding it examples that are very likely to arise when the machine is run. The Boltzmann machine can be used to classify images or create new examples of the type of pattern on which it was trained.
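
The training idea can also be sketched in Python, with heavy simplifications. The toy below has only three visible nodes and no hidden nodes, so every possible state can be listed and its probability computed exactly; a real Boltzmann machine is far larger and is typically trained with sampling rather than exact enumeration. The update rule used here (nudge each connection by the difference between how often two nodes agree in the examples and how often they agree when the machine runs freely) is the standard textbook rule, and all function names are hypothetical.

```python
import itertools
import math


def energy(weights, state):
    """Energy of one state; only connections with i < j are used."""
    n = len(state)
    return -sum(
        weights[i][j] * state[i] * state[j]
        for i in range(n)
        for j in range(i + 1, n)
    )


def distribution(weights, n):
    """Probability of every state: proportional to exp(-energy)."""
    states = list(itertools.product([-1, 1], repeat=n))
    unnormalised = [math.exp(-energy(weights, s)) for s in states]
    total = sum(unnormalised)
    return {s: u / total for s, u in zip(states, unnormalised)}


def train(examples, n, steps=200, rate=0.1):
    """Adjust connections so the training examples become more likely."""
    weights = [[0.0] * n for _ in range(n)]
    for _ in range(steps):
        model = distribution(weights, n)  # how the machine behaves right now
        for i in range(n):
            for j in range(i + 1, n):
                # How often nodes i and j agree in the training examples...
                data_corr = sum(e[i] * e[j] for e in examples) / len(examples)
                # ...versus how often they agree when the machine runs freely.
                model_corr = sum(p * s[i] * s[j] for s, p in model.items())
                weights[i][j] += rate * (data_corr - model_corr)
    return weights


# Illustrative training examples: two three-node patterns.
examples = [(1, 1, -1), (-1, -1, 1)]

before = distribution([[0.0] * 3 for _ in range(3)], 3)
after = distribution(train(examples, 3), 3)

for example in examples:
    print(example, "before:", before[example], "after:", after[example])
```

Before training, all eight states are equally likely. After training, the two example patterns are the most likely states, so drawing states at random from the trained distribution would mostly produce patterns of the kind the machine was shown.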

Hinton has built upon this work, helping initiate the current explosive development of machine learning. “The laureates’ work has already been of the greatest benefit. In physics we use artificial neural networks in a vast range of areas, such as developing new materials with specific properties,” said Ellen Moons, Chair of the Nobel Committee for Physics.